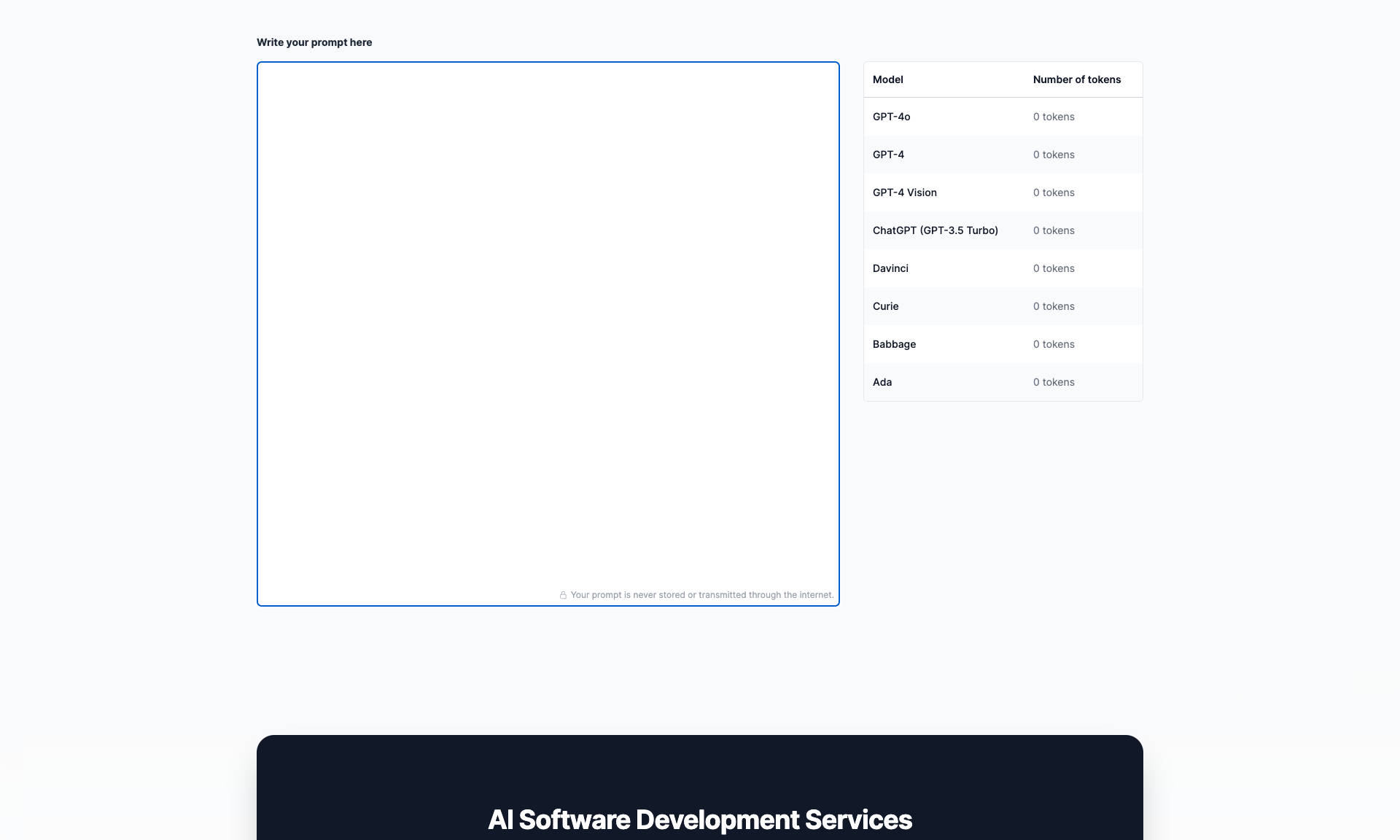

What is the Online Prompt Token Counter?

It is a tool for managing and optimizing token usage when working with OpenAI models. By counting the tokens in your input prompts, it helps you stay within the model's token limit, which has to accommodate both your prompt and the model's generated response.
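As a rough illustration of the limit check, here is a minimal Python sketch. It does not reproduce the counter's actual tokenizer: it assumes OpenAI's rule of thumb that common English text averages about 4 characters per token. Exact counts require the model's own tokenizer (for example, OpenAI's `tiktoken` library). The names `estimate_tokens` and `fits_context`, and the example limit of 4,096 tokens, are hypothetical.

```python
import math

# Rule-of-thumb ratio for common English text: an approximation, not a real tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of `text` with the ~4 characters/token heuristic."""
    if not text:
        return 0
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(prompt: str, context_limit: int, reserved_for_response: int) -> bool:
    """Return True if the estimated prompt tokens plus the tokens reserved
    for the model's reply stay within the context limit."""
    return estimate_tokens(prompt) + reserved_for_response <= context_limit


if __name__ == "__main__":
    prompt = "Summarize the following meeting notes in three bullet points."
    print(estimate_tokens(prompt))
    # Hypothetical limit and reply reservation, for illustration only.
    print(fits_context(prompt, context_limit=4096, reserved_for_response=500))
```

Reserving room for the reply up front matters because the limit covers the prompt and the response together; a prompt that fits on its own can still leave too little space for a useful answer.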

Tracking tokens helps keep interactions with models such as GPT-3.5 cost-effective, because API usage is billed per token. Token counts affect the cost and performance of your requests, and whether they are accepted at all: a request that exceeds the model's token limit is rejected. This tool helps you keep requests efficient and avoid unnecessary expenses.
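Because billing is per token, a cost estimate is simple arithmetic once you know the counts. The sketch below assumes separate per-1,000-token rates for input (prompt) and output (completion) tokens, as many OpenAI models use. The `Pricing` class, `estimate_cost` function, and the prices themselves are hypothetical placeholders; check the provider's current pricing page for real rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-1,000-token prices in USD (placeholder values, not real rates)."""
    input_per_1k: float
    output_per_1k: float


def estimate_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Estimate one request's cost: input and output tokens are priced separately."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = (input_tokens / 1000) * pricing.input_per_1k
    output_cost = (output_tokens / 1000) * pricing.output_per_1k
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical prices, for illustration only.
    example_pricing = Pricing(input_per_1k=0.0005, output_per_1k=0.0015)
    print(f"${estimate_cost(1200, 300, example_pricing):.6f}")
```

Keeping input and output rates separate makes it clear that trimming a long prompt and capping the reply length both reduce cost, possibly by different amounts.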

Token counting also helps you manage responses. Knowing how many tokens a prompt uses tells you how much room is left for the model's reply, and it lets you trim or refine the prompt so it stays concise and within the intended context, which improves the quality of interactions.
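To make the "room left for the reply" idea concrete, here is a minimal sketch. It assumes you already have a prompt token count (from a tokenizer or a counter like this one) and a known context limit; the function name `response_budget` and the example numbers are hypothetical.

```python
def response_budget(prompt_tokens: int, context_limit: int, max_response_tokens: int) -> int:
    """Return how many tokens can be requested for the reply.

    The prompt and the reply share the model's context window, so the reply can
    use at most what the prompt leaves free, capped at the caller's desired maximum.
    Raises ValueError if the prompt alone already fills the window.
    """
    remaining = context_limit - prompt_tokens
    if remaining <= 0:
        raise ValueError(
            f"prompt uses {prompt_tokens} tokens, which leaves no room in a "
            f"{context_limit}-token context; shorten the prompt"
        )
    return min(remaining, max_response_tokens)


if __name__ == "__main__":
    # Hypothetical numbers: a 4,096-token window and a 3,900-token prompt.
    print(response_budget(prompt_tokens=3900, context_limit=4096, max_response_tokens=500))
```

Here the prompt leaves only 196 tokens for the reply, well short of the desired 500, which signals that the prompt should be shortened before sending the request.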